diff --git a/.env b/.env

index 46fb6637e2..d11b5afb19 100644

--- a/.env

+++ b/.env

@@ -1,5 +1,4 @@

-

# Images

IMAGE_VERSION=1.6.0

IMAGE_NAME=ghcr.io/open-telemetry/demo

@@ -54,6 +53,10 @@ FEATURE_FLAG_GRPC_SERVICE_ADDR=featureflagservice:${FEATURE_FLAG_GRPC_SERVICE_PO

FRONTEND_PORT=8080

FRONTEND_ADDR=frontend:${FRONTEND_PORT}

+# Nginx Proxy

+NGINX_PORT=90

+NGINX_ADDR=nginx:${NGINX_PORT}

+

# Frontend Proxy (Envoy)

FRONTEND_HOST=frontend

ENVOY_PORT=8080

@@ -62,7 +65,7 @@ FRONTEND_PROXY_ADDR=frontend-proxy:${ENVOY_PORT}

# Load Generator

LOCUST_WEB_PORT=8089

LOCUST_USERS=10

-LOCUST_HOST=http://${FRONTEND_PROXY_ADDR}

+LOCUST_HOST=http://${NGINX_ADDR}

LOCUST_WEB_HOST=loadgenerator

LOCUST_AUTOSTART=true

LOCUST_HEADLESS=false

@@ -113,3 +116,16 @@ JAEGER_SERVICE_HOST=jaeger

PROMETHEUS_SERVICE_PORT=9090

PROMETHEUS_SERVICE_HOST=prometheus

PROMETHEUS_ADDR=${PROMETHEUS_SERVICE_HOST}:${PROMETHEUS_SERVICE_PORT}

+

+# OpenSearch versions

+OPENSEARCH_VERSION=2.11.0

+

+# OpenSearch Node1

+OPENSEARCH_PORT=9200

+OPENSEARCH_HOST=opensearch

+OPENSEARCH_ADDR=${OPENSEARCH_HOST}:${OPENSEARCH_PORT}

+

+# OpenSearch Dashboard

+OPENSEARCH_DASHBOARD_PORT=5601

+OPENSEARCH_DASHBOARD_HOST=opensearch-dashboards

+OPENSEARCH_DASHBOARD_ADDR=${OPENSEARCH_DASHBOARD_HOST}:${OPENSEARCH_DASHBOARD_PORT}

\ No newline at end of file
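Compose resolves the `${VAR}` references in `.env` when it loads the file, so each `*_ADDR` value is assembled from the host and port entries it references. The shell sketch below only reproduces that resolution for the OpenSearch entries added in this hunk, as an illustration. `docker compose config` remains the authoritative way to print the fully resolved configuration.

```shell
#!/usr/bin/env bash
# Illustration only: the OpenSearch entries from .env evaluated as shell
# assignments. For simple KEY=value lines like these, the ${VAR} references
# resolve to the same strings Compose produces.
OPENSEARCH_PORT=9200
OPENSEARCH_HOST=opensearch
OPENSEARCH_ADDR=${OPENSEARCH_HOST}:${OPENSEARCH_PORT}

OPENSEARCH_DASHBOARD_PORT=5601
OPENSEARCH_DASHBOARD_HOST=opensearch-dashboards
OPENSEARCH_DASHBOARD_ADDR=${OPENSEARCH_DASHBOARD_HOST}:${OPENSEARCH_DASHBOARD_PORT}

# Print the resolved addresses.
echo "OpenSearch:            http://${OPENSEARCH_ADDR}"
echo "OpenSearch Dashboards: http://${OPENSEARCH_DASHBOARD_ADDR}"
```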

diff --git a/.github/CODEOWNERS b/.github/CODEOWNERS

index 9f88832a65..a0fdedaa94 100644

--- a/.github/CODEOWNERS

+++ b/.github/CODEOWNERS

@@ -1,5 +1,2 @@

-# Code owners file.

-# This file controls who is tagged for review for any given pull request.

-

-# For anything not explicitly taken by someone else:

-* @open-telemetry/demo-approvers

+# OpenSearch-Project: This should match the owning team set up in https://github.com/orgs/opensearch-project/teams

+* @pjfitzgibbons @ps48 @kavithacm @derek-ho @joshuali925 @dai-chen @YANG-DB @rupal-bq @mengweieric @vamsi-amazon @swiddis @penghuo @seankao-az

\ No newline at end of file

diff --git a/.github/README.md b/.github/README.md

new file mode 100644

index 0000000000..dfae22e870

--- /dev/null

+++ b/.github/README.md

@@ -0,0 +1,61 @@

+# OpenTelemetry Demo with OpenSearch

+

+The following guide describes how to set up the OpenTelemetry demo with OpenSearch Observability using [Docker compose](#docker-compose) or [Kubernetes](#kubernetes).

+

+## Docker compose

+

+### Prerequisites

+

+- Docker

+- Docker Compose v2.0.0+

+- 4 GB of RAM for the application

+

+### Running this demo

+

+```bash

+git clone https://github.com/opensearch-project/opentelemetry-demo.git

+cd opentelemetry-demo

+docker compose up -d

+```

+

+### Services

+

+Once the images are built and the containers are started, you can access:

+

+- Webstore-Proxy (via Nginx Proxy): http://nginx:90/ (`nginx` DNS name needs to be added)

+ - [Defined here](https://github.com/opensearch-project/opentelemetry-demo/blob/079750428f1bddf16c029f30f478396e45559fec/.env#L58)

+- Webstore: http://frontend:8080/ (`frontend` DNS name needs to be added)

+ - [Defined here](https://github.com/opensearch-project/opentelemetry-demo/blob/079750428f1bddf16c029f30f478396e45559fec/.env#L63)

+- Dashboards: http://opensearch-dashboards:5601/ (`opensearch-dashboards` DNS name needs to be added)

+- Feature Flags UI: http://featureflag:8881/ (`featureflag` DNS name needs to be added)

+ - [Defined here](https://github.com/opensearch-project/opentelemetry-demo/blob/079750428f1bddf16c029f30f478396e45559fec/.env#LL47C31-L47C31)

+- Load Generator UI: http://loadgenerator:8089/ (`loadgenerator` DNS name needs to be added)

+ - [Defined here](https://github.com/opensearch-project/opentelemetry-demo/blob/079750428f1bddf16c029f30f478396e45559fec/.env#L66)
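Each hostname listed above must resolve on the machine running the browser. One common approach, assumed here rather than prescribed by the demo, is to map the names to `127.0.0.1` in `/etc/hosts`; this only works if the compose file publishes the matching ports on the host. The hypothetical `hosts_entries` helper below prints the lines to add.

```shell
#!/usr/bin/env bash
# hosts_entries IP NAME...   (hypothetical helper, not part of the demo)
# Prints one "IP NAME" line per service name, in /etc/hosts format.
hosts_entries() {
  local ip="$1"
  shift
  local name
  for name in "$@"; do
    printf '%s %s\n' "$ip" "$name"
  done
}

# Usage: review the output first, then append it (requires root):
#   hosts_entries 127.0.0.1 nginx frontend featureflag loadgenerator | sudo tee -a /etc/hosts
```

Printing first and appending second keeps the edit to `/etc/hosts` explicit and easy to review.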

+

+OpenSearch has [documented](https://opensearch.org/docs/latest/observing-your-data/trace/trace-analytics-jaeger/#setting-up-opensearch-to-use-jaeger-data) how to use the Observability plugin with Jaeger as a trace signal source.

+

+The following instructions are similar and use the same docker compose file.

+1. Start the demo with the following command from the repository's root directory:

+ ```

+ docker compose up -d

+ ```

+**Note:** Adding the docker compose `--no-build` flag (`docker compose up --no-build -d`) fetches the released docker images from [ghcr](http://ghcr.io/open-telemetry/demo) instead of building them from source.

+Without the `--no-build` flag, all images are rebuilt from source, which may take more than 20 minutes.

+

+### Explore and analyze the data with OpenSearch Observability

+Review the OpenSearch [Observability Architecture](architecture.md).

+

+### Start learning OpenSearch Observability using our tutorial

+[Getting started Tutorial](../tutorial/README.md)

+

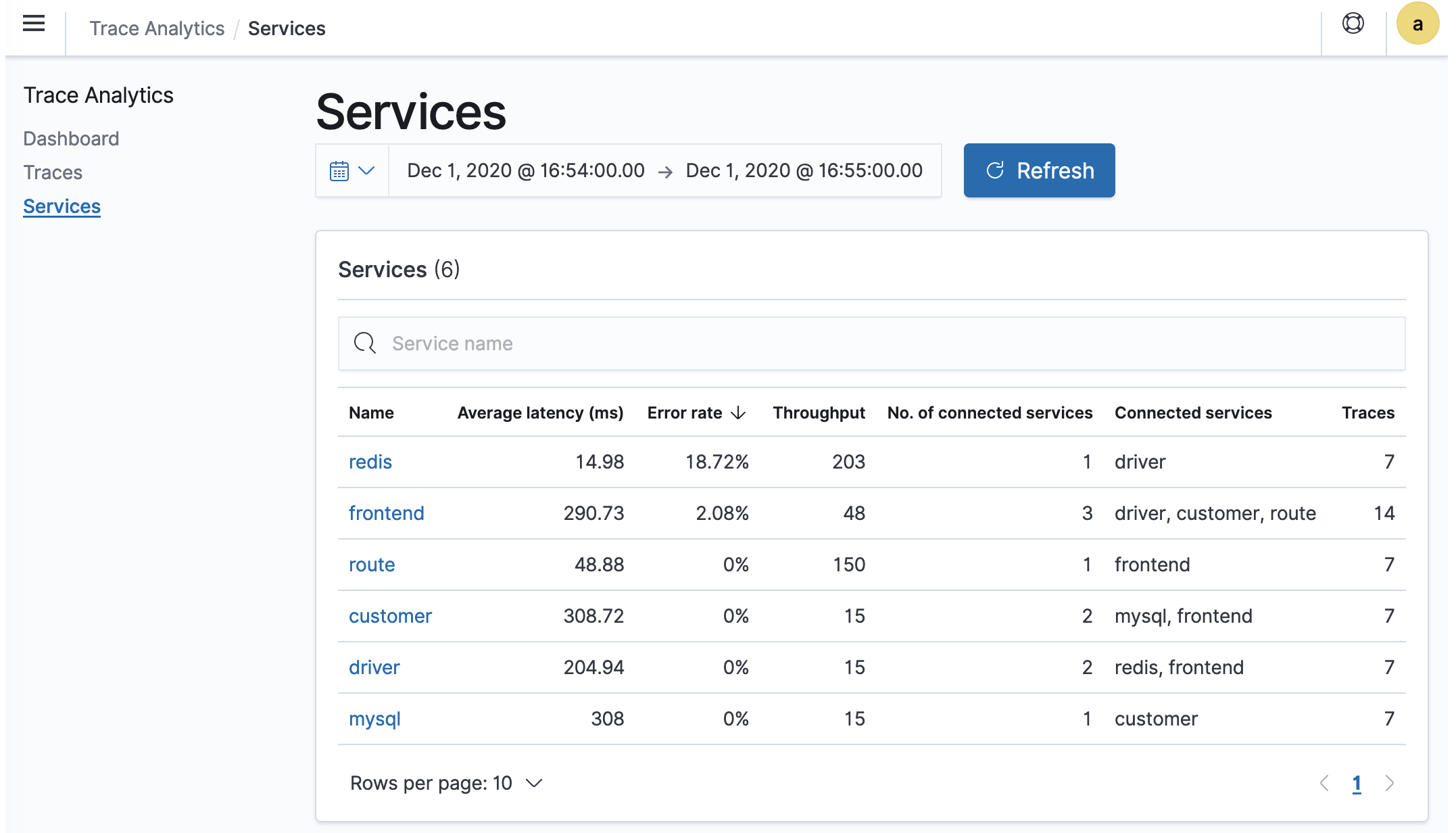

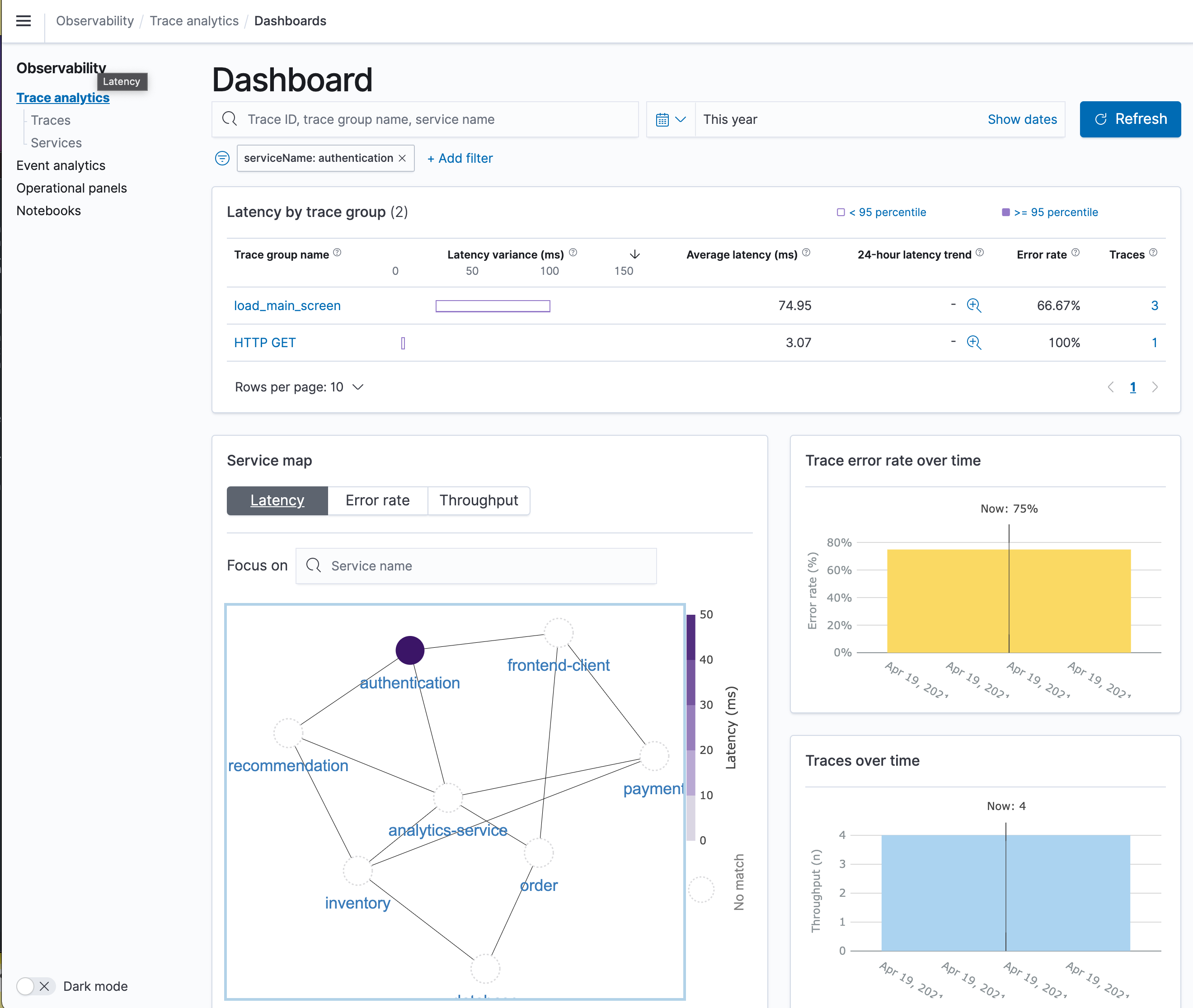

+#### Service map

+

+

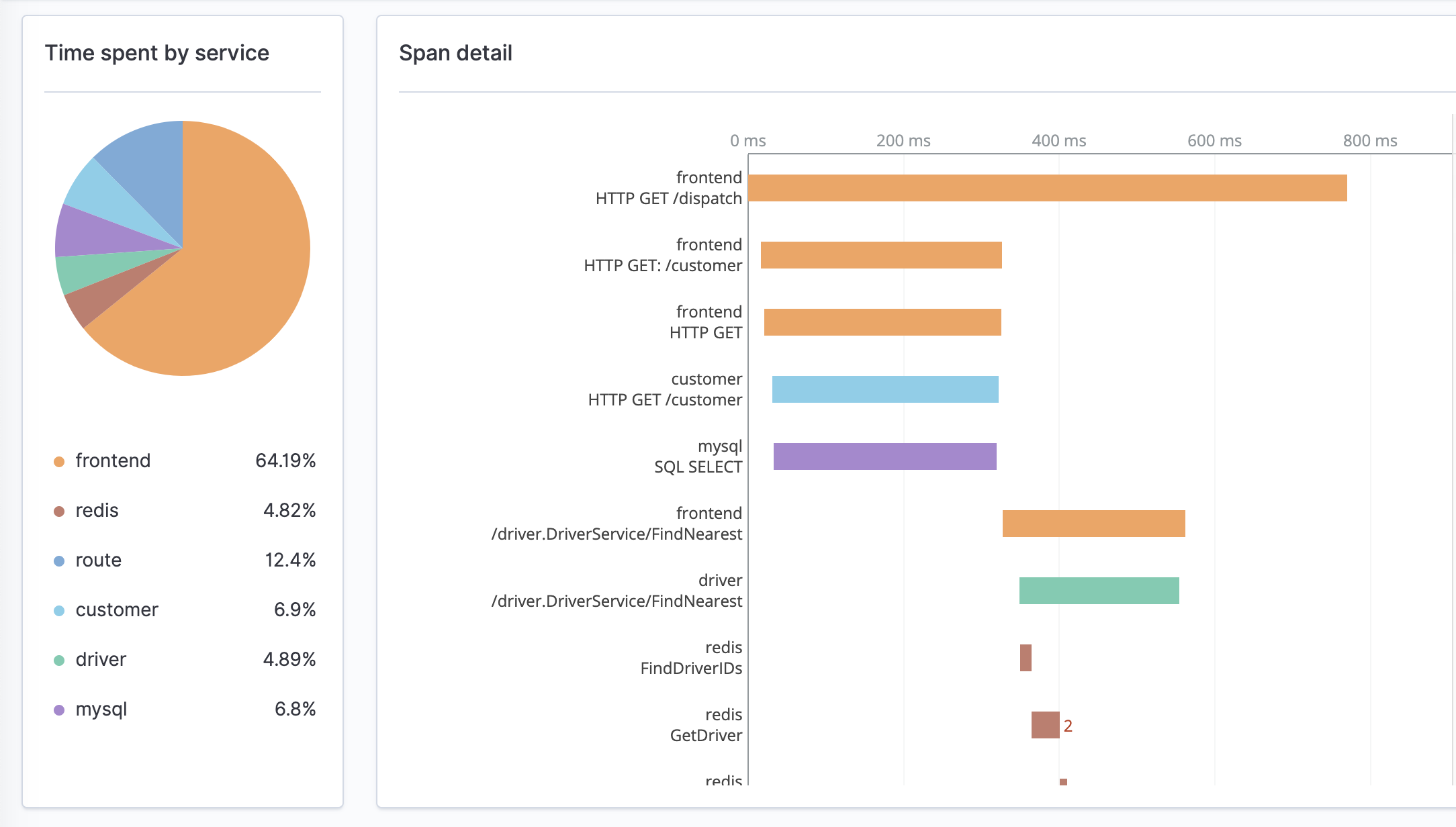

+#### Traces

+

+

+#### Correlation

+

+

+#### Logs

+

diff --git a/.github/architecture.md b/.github/architecture.md

new file mode 100644

index 0000000000..8111d775ab

--- /dev/null

+++ b/.github/architecture.md

@@ -0,0 +1,368 @@

+# OpenSearch OTEL Demo Architecture

+This document reviews the OpenSearch architecture for the [OTEL demo](https://opentelemetry.io/docs/demo/) and explains how to use the new Observability capabilities

+implemented in OpenSearch.

+---

+This diagram provides an overview of the system components, showcasing the configuration derived from the OpenTelemetry Collector (otelcol) configuration file utilized by the OpenTelemetry demo application.

+

+Additionally, it highlights the flow of observability data (traces and metrics) within the system.

+

+

+

+---

+The [OTEL demo architecture](https://opentelemetry.io/docs/demo/architecture/) describes the services that make up the Astronomy Shop.

+

+They consist of:

+ - [Accounting](https://opentelemetry.io/docs/demo/services/accounting/)

+ - [Ad](https://opentelemetry.io/docs/demo/services/ad/)

+ - [Cart](https://opentelemetry.io/docs/demo/services/cart/)

+ - [Checkout](https://opentelemetry.io/docs/demo/services/checkout/)

+ - [Currency](https://opentelemetry.io/docs/demo/services/currency/)

+ - [Email](https://opentelemetry.io/docs/demo/services/email/)

+ - [Feature Flag](https://opentelemetry.io/docs/demo/services/feature-flag/)

+ - [Fraud Detection](https://opentelemetry.io/docs/demo/services/fraud-detection/)

+ - [Frontend](https://opentelemetry.io/docs/demo/services/frontend/)

+ - [Kafka](https://opentelemetry.io/docs/demo/services/kafka/)

+ - [Payment](https://opentelemetry.io/docs/demo/services/payment/)

+ - [Product Catalog](https://opentelemetry.io/docs/demo/services/product-catalog/)

+ - [Quote](https://opentelemetry.io/docs/demo/services/quote/)

+ - [Recommendation](https://opentelemetry.io/docs/demo/services/recommendation/)

+ - [Shipping](https://opentelemetry.io/docs/demo/services/shipping/)

+ - [Fluent-Bit](../src/fluent-bit/README.md) *(exports nginx's OTel logs)*

+ - [Integrations](../src/integrations/README.md) *(pre-canned OpenSearch assets)*

+ - [DataPrepper](../src/dataprepper/README.md) *(OpenSearch's ingestion pipeline)*

+

+Backend supporting services:

+ - [Load Generator](http://load-generator:8089)

+ - See [description](https://opentelemetry.io/docs/demo/services/load-generator/)

+ - [Frontend Nginx Proxy](http://nginx:90) *(replacement for _Frontend-Proxy_)*

+ - See [description](../src/nginx-otel/README.md)

+ - [OpenSearch](https://opensearch:9200)

+ - See [description](https://github.com/opensearch-project/opentelemetry-demo/blob/12d52cbb23bbf4226f6de2dfec840482a0a7d054/docker-compose.yml#L697)

+ - [Dashboards](http://opensearch-dashboards:5601)

+ - See [description](https://github.com/opensearch-project/opentelemetry-demo/blob/12d52cbb23bbf4226f6de2dfec840482a0a7d054/docker-compose.yml#L747)

+ - [Prometheus](http://prometheus:9090)

+ - See [description](https://github.com/opensearch-project/opentelemetry-demo/blob/12d52cbb23bbf4226f6de2dfec840482a0a7d054/docker-compose.yml#L674)

+ - [Feature-Flag](http://feature-flag-service:8881)

+ - See [description](../src/featureflagservice/README.md)

+ - [Grafana](http://grafana:3000)

+ - See [description](https://github.com/opensearch-project/opentelemetry-demo/blob/12d52cbb23bbf4226f6de2dfec840482a0a7d054/docker-compose.yml#L637)

+

+### Services Topology

+The following diagram shows the dependencies between the docker compose services.

+

+

+---

+

+## Purpose

+The purpose of this demo is to demonstrate the different capabilities of OpenSearch Observability for investigating and understanding your system.

+

+### Ingestion

+OpenSearch supports multiple ingestion pipelines:

+ - [Data-Prepper](https://github.com/opensearch-project/data-prepper/) is an OpenSearch ingestion project that ingests standard OTel signals forwarded by the OpenTelemetry Collector

+ - [Jaeger](https://opensearch.org/docs/latest/observing-your-data/trace/trace-analytics-jaeger/) is a distributed tracing system that can use OpenSearch as its trace storage backend

+ - [Fluent-Bit](https://docs.fluentbit.io/manual/pipeline/outputs/opensearch) is a telemetry processor with a built-in output for pushing signals into OpenSearch

+

+### Integrations

+The integration service is a set of pre-canned assets that are loaded together, giving users a simple, automatic way to discover and review their services topology.

+

+These (demo-sample) integrations contain the following assets:

+ - components & index template mapping

+ - datasources

+ - data-stream & indices

+ - queries

+ - dashboards

+

+Once they are loaded, the user can immediately review the OTEL demo services and the dashboards that reflect the system state:

+ - [Nginx Dashboard](../src/integrations/display/nginx-logs-dashboard-new.ndjson) - reflects the Nginx Proxy server that routes all the network communication to/from the frontend

+ - [Prometheus datasource](../src/integrations/datasource/prometheus.json) - reflects the connectivity to the prometheus metric storage that allows us to federate metrics analytics queries

+ - [Logs Datastream](../src/integrations/indices/data-stream.json) - reflects the data-stream used by nginx logs ingestion and dashboards representing a well-structured [log schema](../src/integrations/mapping-templates/logs.mapping)

+

+Once these assets are loaded, the user can start reviewing the Observability dashboards and traces.

+

+

+

+

+

+

+

+

+

+

+

+

+---

+

+### **Scenarios**

+

+How can you solve problems with OpenTelemetry? These scenarios walk you through some pre-configured problems and show you how to interpret OpenTelemetry data to solve them.

+

+- Use the Feature Flag service to generate a Product Catalog error for `GetProduct` requests with product ID `OLJCESPC7Z`

+- Discover a memory leak and diagnose it using metrics and traces

+

+### Prometheus Metrics

+

+To get all metric names, call the following API:

+`http://localhost:9090/api/v1/label/__name__/values`

+

+This returns a response similar to the following:

+```json5

+{

+ "status": "success",

+ "data": [

+ "app_ads_ad_requests_total",

+ "app_currency_counter_total",

+ "app_frontend_requests_total",

+ "app_payment_transactions_total",

+ "app_recommendations_counter_total",

+ "http_server_duration_milliseconds_bucket",

+ "http_server_duration_milliseconds_count",

+ "http_server_duration_milliseconds_sum",

+ "kafka_consumer_assigned_partitions",

+ "kafka_consumer_bytes_consumed_rate",

+ "kafka_consumer_bytes_consumed_total",

+ "kafka_consumer_commit_latency_avg",

+ "kafka_consumer_commit_latency_max",

+ "kafka_consumer_commit_rate",

+ "kafka_consumer_commit_sync_time_ns_total",

+ "kafka_consumer_commit_total",

+ "kafka_consumer_committed_time_ns_total",

+ "kafka_consumer_connection_close_rate",

+ "kafka_consumer_connection_close_total",

+ "kafka_consumer_connection_count",

+ "kafka_consumer_connection_creation_rate",

+ "kafka_consumer_connection_creation_total",

+ "kafka_consumer_failed_authentication_rate",

+ "kafka_consumer_failed_authentication_total",

+ "kafka_consumer_failed_reauthentication_rate",

+ "kafka_consumer_failed_reauthentication_total",

+ "kafka_consumer_failed_rebalance_rate_per_hour",

+ "kafka_consumer_failed_rebalance_total",

+ "kafka_consumer_fetch_latency_avg",

+ "kafka_consumer_fetch_latency_max",

+ "kafka_consumer_fetch_rate",

+ "kafka_consumer_fetch_size_avg",

+ "kafka_consumer_fetch_size_max",

+ "kafka_consumer_fetch_throttle_time_avg",

+ "kafka_consumer_fetch_throttle_time_max",

+ "kafka_consumer_fetch_total",

+ "kafka_consumer_heartbeat_rate",

+ "kafka_consumer_heartbeat_response_time_max",

+ "kafka_consumer_heartbeat_total",

+ "kafka_consumer_incoming_byte_rate",

+ "kafka_consumer_incoming_byte_total",

+ "kafka_consumer_io_ratio",

+ "kafka_consumer_io_time_ns_avg",

+ "kafka_consumer_io_time_ns_total",

+ "kafka_consumer_io_wait_ratio",

+ "kafka_consumer_io_wait_time_ns_avg",

+ "kafka_consumer_io_wait_time_ns_total",

+ "kafka_consumer_io_waittime_total",

+ "kafka_consumer_iotime_total",

+ "kafka_consumer_join_rate",

+ "kafka_consumer_join_time_avg",

+ "kafka_consumer_join_time_max",

+ "kafka_consumer_join_total",

+ "kafka_consumer_last_heartbeat_seconds_ago",

+ "kafka_consumer_last_poll_seconds_ago",

+ "kafka_consumer_last_rebalance_seconds_ago",

+ "kafka_consumer_network_io_rate",

+ "kafka_consumer_network_io_total",

+ "kafka_consumer_outgoing_byte_rate",

+ "kafka_consumer_outgoing_byte_total",

+ "kafka_consumer_partition_assigned_latency_avg",

+ "kafka_consumer_partition_assigned_latency_max",

+ "kafka_consumer_partition_lost_latency_avg",

+ "kafka_consumer_partition_lost_latency_max",

+ "kafka_consumer_partition_revoked_latency_avg",

+ "kafka_consumer_partition_revoked_latency_max",

+ "kafka_consumer_poll_idle_ratio_avg",

+ "kafka_consumer_reauthentication_latency_avg",

+ "kafka_consumer_reauthentication_latency_max",

+ "kafka_consumer_rebalance_latency_avg",

+ "kafka_consumer_rebalance_latency_max",

+ "kafka_consumer_rebalance_latency_total",

+ "kafka_consumer_rebalance_rate_per_hour",

+ "kafka_consumer_rebalance_total",

+ "kafka_consumer_records_consumed_rate",

+ "kafka_consumer_records_consumed_total",

+ "kafka_consumer_records_lag",

+ "kafka_consumer_records_lag_avg",

+ "kafka_consumer_records_lag_max",

+ "kafka_consumer_records_lead",

+ "kafka_consumer_records_lead_avg",

+ "kafka_consumer_records_lead_min",

+ "kafka_consumer_records_per_request_avg",

+ "kafka_consumer_request_latency_avg",

+ "kafka_consumer_request_latency_max",

+ "kafka_consumer_request_rate",

+ "kafka_consumer_request_size_avg",

+ "kafka_consumer_request_size_max",

+ "kafka_consumer_request_total",

+ "kafka_consumer_response_rate",

+ "kafka_consumer_response_total",

+ "kafka_consumer_select_rate",

+ "kafka_consumer_select_total",

+ "kafka_consumer_successful_authentication_no_reauth_total",

+ "kafka_consumer_successful_authentication_rate",

+ "kafka_consumer_successful_authentication_total",

+ "kafka_consumer_successful_reauthentication_rate",

+ "kafka_consumer_successful_reauthentication_total",

+ "kafka_consumer_sync_rate",

+ "kafka_consumer_sync_time_avg",

+ "kafka_consumer_sync_time_max",

+ "kafka_consumer_sync_total",

+ "kafka_consumer_time_between_poll_avg",

+ "kafka_consumer_time_between_poll_max",

+ "kafka_controller_active_count",

+ "kafka_isr_operation_count",

+ "kafka_lag_max",

+ "kafka_logs_flush_Count_milliseconds_total",

+ "kafka_logs_flush_time_50p_milliseconds",

+ "kafka_logs_flush_time_99p_milliseconds",

+ "kafka_message_count_total",

+ "kafka_network_io_bytes_total",

+ "kafka_partition_count",

+ "kafka_partition_offline",

+ "kafka_partition_underReplicated",

+ "kafka_purgatory_size",

+ "kafka_request_count_total",

+ "kafka_request_failed_total",

+ "kafka_request_queue",

+ "kafka_request_time_50p_milliseconds",

+ "kafka_request_time_99p_milliseconds",

+ "kafka_request_time_milliseconds_total",

+ "otel_logs_log_processor_logs",

+ "otel_logs_log_processor_queue_limit",

+ "otel_logs_log_processor_queue_usage",

+ "otel_trace_span_processor_queue_limit",

+ "otel_trace_span_processor_queue_usage",

+ "otel_trace_span_processor_spans",

+ "otelcol_exporter_enqueue_failed_log_records",

+ "otelcol_exporter_enqueue_failed_metric_points",

+ "otelcol_exporter_enqueue_failed_spans",

+ "otelcol_exporter_queue_capacity",

+ "otelcol_exporter_queue_size",

+ "otelcol_exporter_sent_log_records",

+ "otelcol_exporter_sent_metric_points",

+ "otelcol_exporter_sent_spans",

+ "otelcol_process_cpu_seconds",

+ "otelcol_process_memory_rss",

+ "otelcol_process_runtime_heap_alloc_bytes",

+ "otelcol_process_runtime_total_alloc_bytes",

+ "otelcol_process_runtime_total_sys_memory_bytes",

+ "otelcol_process_uptime",

+ "otelcol_processor_accepted_log_records",

+ "otelcol_processor_accepted_metric_points",

+ "otelcol_processor_accepted_spans",

+ "otelcol_processor_batch_batch_send_size_bucket",

+ "otelcol_processor_batch_batch_send_size_count",

+ "otelcol_processor_batch_batch_send_size_sum",

+ "otelcol_processor_batch_timeout_trigger_send",

+ "otelcol_processor_dropped_log_records",

+ "otelcol_processor_dropped_metric_points",

+ "otelcol_processor_dropped_spans",

+ "otelcol_processor_refused_log_records",

+ "otelcol_processor_refused_metric_points",

+ "otelcol_processor_refused_spans",

+ "otelcol_processor_servicegraph_expired_edges",

+ "otelcol_processor_servicegraph_total_edges",

+ "otelcol_receiver_accepted_log_records",

+ "otelcol_receiver_accepted_metric_points",

+ "otelcol_receiver_accepted_spans",

+ "otelcol_receiver_refused_log_records",

+ "otelcol_receiver_refused_metric_points",

+ "otelcol_receiver_refused_spans",

+ "otlp_exporter_exported_total",

+ "otlp_exporter_seen_total",

+ "process_runtime_dotnet_assemblies_count",

+ "process_runtime_dotnet_exceptions_count_total",

+ "process_runtime_dotnet_gc_allocations_size_bytes_total",

+ "process_runtime_dotnet_gc_collections_count_total",

+ "process_runtime_dotnet_gc_committed_memory_size_bytes",

+ "process_runtime_dotnet_gc_heap_size_bytes",

+ "process_runtime_dotnet_gc_objects_size_bytes",

+ "process_runtime_dotnet_jit_compilation_time_nanoseconds_total",

+ "process_runtime_dotnet_jit_il_compiled_size_bytes_total",

+ "process_runtime_dotnet_jit_methods_compiled_count_total",

+ "process_runtime_dotnet_monitor_lock_contention_count_total",

+ "process_runtime_dotnet_thread_pool_completed_items_count_total",

+ "process_runtime_dotnet_thread_pool_queue_length",

+ "process_runtime_dotnet_thread_pool_threads_count",

+ "process_runtime_dotnet_timer_count",

+ "process_runtime_go_cgo_calls",

+ "process_runtime_go_gc_count_total",

+ "process_runtime_go_gc_pause_ns_bucket",

+ "process_runtime_go_gc_pause_ns_count",

+ "process_runtime_go_gc_pause_ns_sum",

+ "process_runtime_go_gc_pause_ns_total",

+ "process_runtime_go_goroutines",

+ "process_runtime_go_mem_heap_alloc_bytes",

+ "process_runtime_go_mem_heap_idle_bytes",

+ "process_runtime_go_mem_heap_inuse_bytes",

+ "process_runtime_go_mem_heap_objects",

+ "process_runtime_go_mem_heap_released_bytes",

+ "process_runtime_go_mem_heap_sys_bytes",

+ "process_runtime_go_mem_live_objects",

+ "process_runtime_go_mem_lookups_total",

+ "process_runtime_jvm_buffer_count",

+ "process_runtime_jvm_buffer_limit_bytes",

+ "process_runtime_jvm_buffer_usage_bytes",

+ "process_runtime_jvm_classes_current_loaded",

+ "process_runtime_jvm_classes_loaded_total",

+ "process_runtime_jvm_classes_unloaded_total",

+ "process_runtime_jvm_cpu_utilization_ratio",

+ "process_runtime_jvm_gc_duration_milliseconds_bucket",

+ "process_runtime_jvm_gc_duration_milliseconds_count",

+ "process_runtime_jvm_gc_duration_milliseconds_sum",

+ "process_runtime_jvm_memory_committed_bytes",

+ "process_runtime_jvm_memory_init_bytes",

+ "process_runtime_jvm_memory_limit_bytes",

+ "process_runtime_jvm_memory_usage_after_last_gc_bytes",

+ "process_runtime_jvm_memory_usage_bytes",

+ "process_runtime_jvm_system_cpu_load_1m_ratio",

+ "process_runtime_jvm_system_cpu_utilization_ratio",

+ "process_runtime_jvm_threads_count",

+ "processedLogs_total",

+ "processedSpans_total",

+ "rpc_client_duration_milliseconds_bucket",

+ "rpc_client_duration_milliseconds_count",

+ "rpc_client_duration_milliseconds_sum",

+ "rpc_server_duration_milliseconds_bucket",

+ "rpc_server_duration_milliseconds_count",

+ "rpc_server_duration_milliseconds_sum",

+ "runtime_cpython_cpu_time_seconds_total",

+ "runtime_cpython_gc_count_bytes_total",

+ "runtime_cpython_memory_bytes_total",

+ "runtime_uptime_milliseconds",

+ "scrape_duration_seconds",

+ "scrape_samples_post_metric_relabeling",

+ "scrape_samples_scraped",

+ "scrape_series_added",

+ "span_metrics_calls_total",

+ "span_metrics_duration_milliseconds_bucket",

+ "span_metrics_duration_milliseconds_count",

+ "span_metrics_duration_milliseconds_sum",

+ "system_cpu_time_seconds_total",

+ "system_cpu_utilization_ratio",

+ "system_disk_io_bytes_total",

+ "system_disk_operations_total",

+ "system_disk_time_seconds_total",

+ "system_memory_usage_bytes",

+ "system_memory_utilization_ratio",

+ "system_network_connections",

+ "system_network_dropped_packets_total",

+ "system_network_errors_total",

+ "system_network_io_bytes_total",

+ "system_network_packets_total",

+ "system_swap_usage_pages",

+ "system_swap_utilization_ratio",

+ "system_thread_count",

+ "target_info",

+ "up"

+ ]

+}

+```
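The same request can be scripted. The sketch below assumes Prometheus is reachable at `http://localhost:9090` (as in the URL above) and that `curl` is installed. It filters names with `grep` instead of a JSON parser, which is adequate only for the flat `data` array of quoted names shown above. `fetch_metric_names` and `filter_metrics` are illustrative names, not part of the demo.

```shell
#!/usr/bin/env bash
# Base URL of the Prometheus server (assumption: published on localhost:9090).
PROMETHEUS_URL="${PROMETHEUS_URL:-http://localhost:9090}"

# Query the Prometheus HTTP API for every value of the __name__ label,
# i.e. every metric name currently stored.
fetch_metric_names() {
  curl -s "${PROMETHEUS_URL}/api/v1/label/__name__/values"
}

# Read a label-values JSON response on stdin and print, one per line, the
# metric names that start with PREFIX. Assumes each name is a quoted string
# of letters, digits, underscores or colons.
filter_metrics() {
  local prefix="$1"
  grep -o "\"${prefix}[A-Za-z0-9_:]*\"" | tr -d '"'
}

# Usage (requires a running demo):
#   fetch_metric_names | filter_metrics otelcol_exporter_
```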

+

+### **Reference**

+Project reference documentation, such as requirements and feature matrices, is available [here](https://opentelemetry.io/docs/demo/#reference).

+

diff --git a/.github/img/DemoFlow.png b/.github/img/DemoFlow.png

new file mode 100644

index 0000000000..ddd45b41b6

Binary files /dev/null and b/.github/img/DemoFlow.png differ

diff --git a/.github/img/docker-services-topology.png b/.github/img/docker-services-topology.png

new file mode 100644

index 0000000000..8e3db8fca9

Binary files /dev/null and b/.github/img/docker-services-topology.png differ

diff --git a/.github/img/nginx_dashboard.png b/.github/img/nginx_dashboard.png

new file mode 100644

index 0000000000..e88c1b2094

Binary files /dev/null and b/.github/img/nginx_dashboard.png differ

diff --git a/.github/img/otelcol-data-flow-overview.png b/.github/img/otelcol-data-flow-overview.png

new file mode 100644

index 0000000000..e8359dcbdc

Binary files /dev/null and b/.github/img/otelcol-data-flow-overview.png differ

diff --git a/.github/img/prometheus_federated_metrics.png b/.github/img/prometheus_federated_metrics.png

new file mode 100644

index 0000000000..145e232ae0

Binary files /dev/null and b/.github/img/prometheus_federated_metrics.png differ

diff --git a/.github/img/service-graph.png b/.github/img/service-graph.png

new file mode 100644

index 0000000000..028c0aafa5

Binary files /dev/null and b/.github/img/service-graph.png differ

diff --git a/.github/img/services.png b/.github/img/services.png

new file mode 100644

index 0000000000..753e298a87

Binary files /dev/null and b/.github/img/services.png differ

diff --git a/.github/img/trace_analytics.png b/.github/img/trace_analytics.png

new file mode 100644

index 0000000000..9357a2b0fb

Binary files /dev/null and b/.github/img/trace_analytics.png differ

diff --git a/.github/img/traces.png b/.github/img/traces.png

new file mode 100644

index 0000000000..d4549bab84

Binary files /dev/null and b/.github/img/traces.png differ

diff --git a/.github/workflows/add-untriaged.yml b/.github/workflows/add-untriaged.yml

new file mode 100644

index 0000000000..c6a64fd8af

--- /dev/null

+++ b/.github/workflows/add-untriaged.yml

@@ -0,0 +1,19 @@

+name: Apply 'untriaged' label during issue lifecycle

+

+on:

+  issues:

+    types: [opened, reopened, transferred]

+

+jobs:

+  apply-label:

+    runs-on: ubuntu-latest

+    steps:

+      - uses: actions/github-script@v6

+        with:

+          script: |

+            github.rest.issues.addLabels({

+              issue_number: context.issue.number,

+              owner: context.repo.owner,

+              repo: context.repo.repo,

+              labels: ['untriaged']

+            })

\ No newline at end of file

diff --git a/.whitesource b/.whitesource

new file mode 100644

index 0000000000..d465069a27

--- /dev/null

+++ b/.whitesource

@@ -0,0 +1,33 @@

+{

+ "scanSettings": {

+ "configMode": "AUTO",

+ "configExternalURL": "",

+ "projectToken": "",

+ "baseBranches": []

+ },

+ "scanSettingsSAST": {

+ "enableScan": false,

+ "scanPullRequests": false,

+ "incrementalScan": true,

+ "baseBranches": [],

+ "snippetSize": 10

+ },

+ "checkRunSettings": {

+ "vulnerableCheckRunConclusionLevel": "failure",

+ "displayMode": "diff",

+ "useMendCheckNames": true

+ },

+ "checkRunSettingsSAST": {

+ "checkRunConclusionLevel": "failure",

+ "severityThreshold": "high"

+ },

+ "issueSettings": {

+ "minSeverityLevel": "LOW",

+ "issueType": "DEPENDENCY"

+ },

+ "remediateSettings": {

+ "workflowRules": {

+ "enabled": true

+ }

+ }

+}

\ No newline at end of file

diff --git a/ADMINS.md b/ADMINS.md

new file mode 100644

index 0000000000..81f320c5c0

--- /dev/null

+++ b/ADMINS.md

@@ -0,0 +1,7 @@

+## Admins

+

+| Admin | GitHub ID | Affiliation |

+| --------------- | --------------------------------------- | ----------- |

+| Henri Yandell | [hyandell](https://github.com/hyandell) | Amazon |

+

+[This document](https://github.com/opensearch-project/.github/blob/main/ADMINS.md) explains what admins do in this repo and how they should be doing it. If you're interested in becoming a maintainer, see [MAINTAINERS](MAINTAINERS.md). If you're interested in contributing, see [CONTRIBUTING](CONTRIBUTING.md).

diff --git a/CODE_OF_CONDUCT.md b/CODE_OF_CONDUCT.md

new file mode 100644

index 0000000000..997bae660b

--- /dev/null

+++ b/CODE_OF_CONDUCT.md

@@ -0,0 +1,25 @@

+

+This code of conduct applies to all spaces provided by the OpenSource project including in code, documentation, issue trackers, mailing lists, chat channels, wikis, blogs, social media and any other communication channels used by the project.

+

+

+**Our open source communities endeavor to:**

+

+* Be Inclusive: We are committed to being a community where everyone can join and contribute. This means using inclusive and welcoming language.

+* Be Welcoming: We are committed to maintaining a safe space for everyone to be able to contribute.

+* Be Respectful: We are committed to encouraging differing viewpoints, accepting constructive criticism and working collaboratively towards decisions that help the project grow. Disrespectful and unacceptable behavior will not be tolerated.

+* Be Collaborative: We are committed to supporting what is best for our community and users. When we build anything for the benefit of the project, we should document the work we do and communicate to others on how this affects their work.

+

+

+**Our Responsibility. As contributors, members, or bystanders we each individually have the responsibility to behave professionally and respectfully at all times. Disrespectful and unacceptable behaviors include, but are not limited to:**

+

+* The use of violent threats, abusive, discriminatory, or derogatory language;

+* Offensive comments related to gender, gender identity and expression, sexual orientation, disability, mental illness, race, political or religious affiliation;

+* Posting of sexually explicit or violent content;

+* The use of sexualized language and unwelcome sexual attention or advances;

+* Public or private harassment of any kind;

+* Publishing private information, such as physical or electronic address, without permission;

+* Other conduct which could reasonably be considered inappropriate in a professional setting;

+* Advocating for or encouraging any of the above behaviors.

+**Enforcement and Reporting Code of Conduct Issues:**

+

+Instances of abusive, harassing, or otherwise unacceptable behavior may be reported. [Contact us](mailto:opensource-codeofconduct@amazon.com). All complaints will be reviewed and investigated and will result in a response that is deemed necessary and appropriate to the circumstances.

diff --git a/CONTRIBUTING.md b/CONTRIBUTING.md

index 1d50f4a6da..3ae12e54f2 100644

--- a/CONTRIBUTING.md

+++ b/CONTRIBUTING.md

@@ -1,5 +1,8 @@

# Contributing

+OpenSearch is a community project that is built and maintained by people just like **you**.

+[This document](https://github.com/opensearch-project/.github/blob/main/CONTRIBUTING.md) explains how you can contribute to this and related projects.

+

Welcome to OpenTelemetry Demo Webstore repository!

Before you start - see OpenTelemetry general

diff --git a/DEVELOPER_GUIDE.md b/DEVELOPER_GUIDE.md

new file mode 100644

index 0000000000..5da22b1598

--- /dev/null

+++ b/DEVELOPER_GUIDE.md

@@ -0,0 +1,74 @@

+## Developer Guide

+

+So you want to contribute code to this project? Excellent! We're glad you're here. Here's what you need to do.

+

+## Development Environment

+

+You can contribute to this project from a Windows, macOS or Linux machine. The

+first step to contributing is ensuring you can run the demo successfully from

+your local machine.

+

+On all platforms, the minimum requirements are:

+

+- Docker

+- [Docker Compose](https://docs.docker.com/compose/install/#install-compose) v2.0.0+

+

+### Clone Repo

+

+- Clone the Webstore Demo repository:

+

+```shell

+git clone https://github.com/opensearch-project/opentelemetry-demo.git

+```

+

+### Open Folder

+

+- Navigate to the cloned folder:

+

+```shell

+cd opentelemetry-demo/

+```

+

+### Gradle Update [Windows Only]

+

+- Navigate to the Java Ad Service folder to install and update Gradle:

+

+```shell

+cd .\src\adservice\

+.\gradlew installDist

+.\gradlew wrapper --gradle-version 7.4.2

+```

+

+### Run Docker Compose

+

+- Start the demo (it can take ~20 minutes the first time the command is executed, as

+ all the images will be built):

+

+```shell

+docker compose up -d

+```
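Because the first start can take a long time, it can help to poll a service until it answers before opening the UI. The `wait_for` helper below is a hypothetical, generic sketch: it re-runs any command until it succeeds or the attempt budget is used up. The commented `curl` probe assumes the frontend is published on `localhost:8080`; adjust the URL to your setup.

```shell
#!/usr/bin/env bash
# wait_for ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]   (hypothetical helper)
# Runs COMMAND up to ATTEMPTS times, sleeping DELAY_SECONDS between failed
# attempts. Returns 0 as soon as COMMAND succeeds, 1 if every attempt fails.
wait_for() {
  local attempts="$1" delay="$2"
  shift 2
  local i=1
  while [ "$i" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    # Skip the pause after the final attempt.
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  return 1
}

# Usage (assumes the frontend port is published on localhost:8080):
#   wait_for 60 10 curl -sf -o /dev/null http://localhost:8080/ && echo "frontend is up"
```

Taking the probe as an arbitrary command keeps the helper independent of any one service or tool.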

+

+### Verify the Webstore & the Telemetry

+

+Once the images are built and the containers are started, you can access:

+

+- Webstore-Proxy (via Nginx Proxy): http://nginx:90/ (`nginx` DNS name needs to be added)

+ - [Defined here](https://github.com/opensearch-project/opentelemetry-demo/blob/079750428f1bddf16c029f30f478396e45559fec/.env#L58)

+- Webstore: http://frontend:8080/ (`frontend` DNS name needs to be added)

+ - [Defined here](https://github.com/opensearch-project/opentelemetry-demo/blob/079750428f1bddf16c029f30f478396e45559fec/.env#L63)

+- Dashboards: http://opensearch-dashboards:5601/ (`opensearch-dashboards` DNS name needs to be added)

+- Feature Flags UI: http://featureflag:8881/ (`featureflag` DNS name needs to be added)

+ - [Defined here](https://github.com/opensearch-project/opentelemetry-demo/blob/079750428f1bddf16c029f30f478396e45559fec/.env#LL47C31-L47C31)

+- Load Generator UI: http://loadgenerator:8089/ (`loadgenerator` DNS name needs to be added)

+ - [Defined here](https://github.com/opensearch-project/opentelemetry-demo/blob/079750428f1bddf16c029f30f478396e45559fec/.env#L66)

+

+OpenSearch has [documented](https://opensearch.org/docs/latest/observing-your-data/trace/trace-analytics-jaeger/#setting-up-opensearch-to-use-jaeger-data) how to use the Observability plugin with Jaeger as a trace signal source.

+

+

+### Review the Documentation

+

+The Demo team is committed to keeping the demo up to date. That means the

+documentation as well as the code. When making changes to any service or feature

+remember to find the related docs and update those as well. Most (but not all)

+documentation can be found on the OTel website under [Demo docs][docs].

+

diff --git a/MAINTAINERS.md b/MAINTAINERS.md

new file mode 100644

index 0000000000..ad5aad7c0c

--- /dev/null

+++ b/MAINTAINERS.md

@@ -0,0 +1,34 @@

+## Overview

+

+This document contains a list of maintainers in this repo. See [opensearch-project/.github/RESPONSIBILITIES.md](https://github.com/opensearch-project/.github/blob/main/RESPONSIBILITIES.md#maintainer-responsibilities), which explains what the role of maintainer means, what maintainers do in this and other repos, and how they should be doing it. If you're interested in contributing and becoming a maintainer, see [CONTRIBUTING](CONTRIBUTING.md).

+

+## Current Maintainers

+

+| Maintainer | GitHub ID | Affiliation |

+| ----------------- | ------------------------------------------------- | ----------- |

+| Eric Wei | [mengweieric](https://github.com/mengweieric) | Amazon |

+| Joshua Li | [joshuali925](https://github.com/joshuali925) | Amazon |

+| Shenoy Pratik | [ps48](https://github.com/ps48) | Amazon |

+| Kavitha Mohan | [kavithacm](https://github.com/kavithacm) | Amazon |

+| Rupal Mahajan | [rupal-bq](https://github.com/rupal-bq) | Amazon |

+| Derek Ho | [derek-ho](https://github.com/derek-ho) | Amazon |

+| Lior Perry | [YANG-DB](https://github.com/YANG-DB) | Amazon |

+| Peter Fitzgibbons | [pjfitzgibbons](https://github.com/pjfitzgibbons) | Amazon |

+| Simeon Widdis | [swiddis](https://github.com/swiddis) | Amazon |

+| Chen Dai | [dai-chen](https://github.com/dai-chen) | Amazon |

+| Vamsi Manohar | [vamsi-amazon](https://github.com/vamsi-amazon) | Amazon |

+| Peng Huo | [penghuo](https://github.com/penghuo) | Amazon |

+| Sean Kao | [seankao-az](https://github.com/seankao-az) | Amazon |

+

+

+## Emeritus Maintainers

+

+| Maintainer | GitHub ID | Affiliation |

+| ----------------- | ------------------------------------------------------- | ----------- |

+| Charlotte Henkle | [CEHENKLE](https://github.com/CEHENKLE) | Amazon |

+| Anirudha Jadhav | [anirudha](https://github.com/anirudha) | Amazon |

+| Nick Knize | [nknize](https://github.com/nknize) | Amazon |

+| David Cui | [davidcui1225](https://github.com/davidcui1225) | Amazon |

+| Eugene Lee | [eugenesk24](https://github.com/eugenesk24) | Amazon |

+| Zhongnan Su | [zhongnansu](https://github.com/zhongnansu) | Amazon |

+| Sean Li | [sejli](https://github.com/sejli) | Amazon |

diff --git a/NOTICE b/NOTICE

new file mode 100644

index 0000000000..731cb60065

--- /dev/null

+++ b/NOTICE

@@ -0,0 +1,2 @@

+OpenSearch (https://opensearch.org/)

+Copyright OpenSearch Contributors

diff --git a/RELEASING.md b/RELEASING.md

new file mode 100644

index 0000000000..91263ac47d

--- /dev/null

+++ b/RELEASING.md

@@ -0,0 +1 @@

+This project follows the [OpenSearch release process](https://github.com/opensearch-project/.github/blob/main/RELEASING.md).

diff --git a/SECURITY.md b/SECURITY.md

new file mode 100644

index 0000000000..b862921043

--- /dev/null

+++ b/SECURITY.md

@@ -0,0 +1,3 @@

+## Reporting a Vulnerability

+

+If you discover a potential security issue in this project we ask that you notify AWS/Amazon Security via our [vulnerability reporting page](http://aws.amazon.com/security/vulnerability-reporting/) or directly via email to aws-security@amazon.com. Please do **not** create a public GitHub issue.

diff --git a/add_hosts_locally.sh b/add_hosts_locally.sh

new file mode 100644

index 0000000000..a90eef3822

--- /dev/null

+++ b/add_hosts_locally.sh

@@ -0,0 +1,31 @@

+#!/bin/bash

+# Copyright The OpenTelemetry Authors

+# SPDX-License-Identifier: Apache-2.0

+

+# Local hosts used to help develop and debug the OTEL demo locally

+

+# The IP address you want to associate with the hostname

+# IP="0.0.0.0"

+IP="127.0.0.1"

+

+# The hostnames you want to associate with the IP address

+OPENSEARCH_HOST="opensearch"

+OPENSEARCH_DASHBOARD="opensearch-dashboards"

+OTEL_STORE="frontend"

+FEATURE_FLAG_SERVICE="feature-flag-service"

+NGINX="nginx"

+OTEL_LOADER="loadgenerator"

+PROMETHEUS="prometheus"

+LOAD_GENERATOR="load-generator"

+GRAFANA="grafana"

+

+# Add the entry to the /etc/hosts file

+echo "$IP $OPENSEARCH_HOST" | sudo tee -a /etc/hosts

+echo "$IP $OPENSEARCH_DASHBOARD" | sudo tee -a /etc/hosts

+echo "$IP $OTEL_STORE" | sudo tee -a /etc/hosts

+echo "$IP $PROMETHEUS" | sudo tee -a /etc/hosts

+echo "$IP $OTEL_LOADER" | sudo tee -a /etc/hosts

+echo "$IP $NGINX" | sudo tee -a /etc/hosts

+echo "$IP $LOAD_GENERATOR" | sudo tee -a /etc/hosts

+echo "$IP $FEATURE_FLAG_SERVICE" | sudo tee -a /etc/hosts

+echo "$IP $GRAFANA" | sudo tee -a /etc/hosts
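Because each line of the script appends with `tee -a`, running it more than once leaves duplicate entries in `/etc/hosts`. A minimal idempotent sketch, assuming Bash and awk; `has_host_entry` and `add_host_entry` are hypothetical helpers, not part of the repository:

```shell
#!/usr/bin/env bash
# Sketch only: has_host_entry and add_host_entry are hypothetical helpers.

# Succeed if a non-comment line in the hosts file maps $ip to $name.
has_host_entry() {
  local ip="$1" name="$2" hosts_file="$3"
  awk -v ip="$ip" -v name="$name" '
    $1 == ip {
      for (i = 2; i <= NF; i++) {
        if ($i ~ /^#/) break          # rest of the line is an inline comment
        if ($i == name) found = 1     # exact hostname match, not a prefix
      }
    }
    END { exit (found ? 0 : 1) }
  ' "$hosts_file"
}

# Append "ip name" only when that mapping is missing. Writes directly if the
# file is writable, otherwise falls back to sudo tee -a like the script above.
add_host_entry() {
  local ip="$1" name="$2" hosts_file="${3:-/etc/hosts}"
  if has_host_entry "$ip" "$name" "$hosts_file"; then
    echo "skip: $name already maps to $ip"
  elif [ -w "$hosts_file" ]; then
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
  else
    printf '%s %s\n' "$ip" "$name" | sudo tee -a "$hosts_file" > /dev/null
  fi
}
```

With these helpers, a loop such as `for h in opensearch opensearch-dashboards frontend nginx; do add_host_entry 127.0.0.1 "$h"; done` can be rerun safely; a name like `opensearch` is not mistaken for `opensearch-dashboards` because whole fields are compared.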

diff --git a/docker-compose.minimal.yml b/docker-compose.minimal.yml

index 69a5f48009..ace5c4d674 100644

--- a/docker-compose.minimal.yml

+++ b/docker-compose.minimal.yml

@@ -515,7 +515,9 @@ services:

- ./src/otelcollector/otelcol-config-extras.yml:/etc/otelcol-config-extras.yml

ports:

- "4317" # OTLP over gRPC receiver

- - "4318" # OTLP over HTTP receiver

+ - "4318:4318" # OTLP over HTTP receiver

+ - "9464" # Prometheus exporter

+ - "8888" # metrics endpoint

depends_on:

- jaeger

logging: *logging

diff --git a/docker-compose.yml b/docker-compose.yml

index b191d05a9c..0a747c269b 100644

--- a/docker-compose.yml

+++ b/docker-compose.yml

@@ -8,6 +8,9 @@ x-default-logging: &logging

max-size: "5m"

max-file: "2"

+volumes:

+ opensearch-data:

+

networks:

default:

name: opentelemetry-demo

@@ -219,8 +222,8 @@ services:

memory: 175M

restart: unless-stopped

ports:

- - "${FEATURE_FLAG_SERVICE_PORT}" # Feature Flag Service UI

- - "${FEATURE_FLAG_GRPC_SERVICE_PORT}" # Feature Flag Service gRPC API

+ - "${FEATURE_FLAG_SERVICE_PORT}:${FEATURE_FLAG_SERVICE_PORT}" # Feature Flag Service UI

+ - "${FEATURE_FLAG_GRPC_SERVICE_PORT}" # Feature Flag Service gRPC API

environment:

- FEATURE_FLAG_SERVICE_PORT

- FEATURE_FLAG_GRPC_SERVICE_PORT

@@ -277,7 +280,7 @@ services:

memory: 200M

restart: unless-stopped

ports:

- - "${FRONTEND_PORT}"

+ - "${FRONTEND_PORT}:${FRONTEND_PORT}"

environment:

- PORT=${FRONTEND_PORT}

- FRONTEND_ADDR

@@ -316,47 +319,6 @@ services:

condition: service_started

logging: *logging

- # Frontend Proxy (Envoy)

- frontendproxy:

- image: ${IMAGE_NAME}:${IMAGE_VERSION}-frontendproxy

- container_name: frontend-proxy

- build:

- context: ./

- dockerfile: src/frontendproxy/Dockerfile

- deploy:

- resources:

- limits:

- memory: 50M

- ports:

- - "${ENVOY_PORT}:${ENVOY_PORT}"

- - 10000:10000

- environment:

- - FRONTEND_PORT

- - FRONTEND_HOST

- - FEATURE_FLAG_SERVICE_PORT

- - FEATURE_FLAG_SERVICE_HOST

- - LOCUST_WEB_HOST

- - LOCUST_WEB_PORT

- - GRAFANA_SERVICE_PORT

- - GRAFANA_SERVICE_HOST

- - JAEGER_SERVICE_PORT

- - JAEGER_SERVICE_HOST

- - OTEL_COLLECTOR_HOST

- - OTEL_COLLECTOR_PORT_GRPC

- - OTEL_COLLECTOR_PORT_HTTP

- - ENVOY_PORT

- depends_on:

- frontend:

- condition: service_started

- featureflagservice:

- condition: service_started

- loadgenerator:

- condition: service_started

- jaeger:

- condition: service_started

- grafana:

- condition: service_started

-

# Load Generator

loadgenerator:

image: ${IMAGE_NAME}:${IMAGE_VERSION}-loadgenerator

@@ -372,7 +334,7 @@ services:

memory: 120M

restart: unless-stopped

ports:

- - "${LOCUST_WEB_PORT}"

+ - "${LOCUST_WEB_PORT}:${LOCUST_WEB_PORT}"

environment:

- LOCUST_WEB_PORT

- LOCUST_USERS

@@ -461,6 +423,7 @@ services:

ports:

- "${QUOTE_SERVICE_PORT}"

environment:

+ - FEATURE_FLAG_GRPC_SERVICE_ADDR

- OTEL_EXPORTER_OTLP_ENDPOINT=http://${OTEL_COLLECTOR_HOST}:4318

- OTEL_PHP_AUTOLOAD_ENABLED=true

- QUOTE_SERVICE_PORT

@@ -507,6 +470,39 @@ services:

condition: service_started

logging: *logging

+ # Frontend Nginx Proxy service

+ nginx:

+ image: nginx:latest

+ container_name: nginx

+ volumes:

+ - ./src/nginx-otel/default.conf:/etc/nginx/conf.d/default.conf

+ ports:

+ - 90:90

+ depends_on:

+ - frontend

+ - fluentbit

+ - otelcol

+ - loadgenerator

+ links:

+ - fluentbit

+ logging:

+ driver: "fluentd"

+ options:

+ fluentd-address: 127.0.0.1:24224

+ tag: nginx.access

+

+ # Fluent-bit logs shipper service

+ fluentbit:

+ container_name: fluentbit

+ image: fluent/fluent-bit:latest

+ volumes:

+ - ./src/fluent-bit:/fluent-bit/etc

+ ports:

+ - "24224:24224"

+ - "24224:24224/udp"

+ depends_on:

+ - opensearch

+

# Shipping service

shippingservice:

image: ${IMAGE_NAME}:${IMAGE_VERSION}-shippingservice

@@ -607,25 +603,80 @@ services:

# ********************

# Jaeger

jaeger:

- image: jaegertracing/all-in-one:1.51

+ image: jaegertracing/jaeger-collector:latest

container_name: jaeger

command:

- - "--memory.max-traces=8000"

- - "--query.base-path=/jaeger/ui"

- - "--prometheus.server-url=http://${PROMETHEUS_ADDR}"

- - "--prometheus.query.normalize-calls=true"

- - "--prometheus.query.normalize-duration=true"

+ - "--metrics-backend=prometheus"

+ - "--es.server-urls=https://opensearch:9200"

+ - "--es.tls.enabled=true"

deploy:

resources:

limits:

memory: 300M

restart: unless-stopped

ports:

- - "${JAEGER_SERVICE_PORT}" # Jaeger UI

- - "4317" # OTLP gRPC default port

+ - "${JAEGER_SERVICE_PORT}" # Jaeger UI

+ - "4317" # OTLP gRPC default port

+ - "14269:14269"

+ - "14268:14268"

+ - "14267:14267"

+ - "14250:14250"

+ - "9411:9411"

environment:

+ - COLLECTOR_OTLP_ENABLED=true

- METRICS_STORAGE_TYPE=prometheus

+ - SPAN_STORAGE_TYPE=opensearch

+ - ES_TAGS_AS_FIELDS_ALL=true

+ - ES_USERNAME=admin

+ - ES_PASSWORD=admin

+ - ES_TLS_SKIP_HOST_VERIFY=true

logging: *logging

+ depends_on:

+ - opensearch

+

+ jaeger-query:

+ image: jaegertracing/jaeger-query:latest

+ container_name: jaeger-query

+ command:

+ - "--metrics-backend=prometheus"

+ - "--es.server-urls=https://opensearch:9200"

+ - "--es.tls.enabled=true"

+ deploy:

+ resources:

+ limits:

+ memory: 300M

+ restart: unless-stopped

+ ports:

+ - 16685

+ environment:

+ - COLLECTOR_OTLP_ENABLED=true

+ - METRICS_STORAGE_TYPE=prometheus

+ - SPAN_STORAGE_TYPE=opensearch

+ - ES_TAGS_AS_FIELDS_ALL=true

+ - ES_USERNAME=admin

+ - ES_PASSWORD=admin

+ - ES_TLS_SKIP_HOST_VERIFY=true

+ depends_on:

+ - opensearch

+ logging: *logging

+

+ jaeger-agent:

+ image: jaegertracing/jaeger-agent:latest

+ container_name: jaeger-agent

+ hostname: jaeger-agent

+ command: ["--reporter.grpc.host-port=jaeger:14250"]

+ ports:

+ - "${GRAFANA_SERVICE_PORT}"

+ - "5775:5775/udp"

+ - "6831:6831/udp"

+ - "6832:6832/udp"

+ - "5778:5778"

+ restart: on-failure

+ environment:

+ - SPAN_STORAGE_TYPE=opensearch

+ depends_on:

+ - jaeger

# Grafana

grafana:

@@ -641,7 +692,7 @@ services:

- ./src/grafana/grafana.ini:/etc/grafana/grafana.ini

- ./src/grafana/provisioning/:/etc/grafana/provisioning/

ports:

- - "${GRAFANA_SERVICE_PORT}"

+ - "${GRAFANA_SERVICE_PORT}:${GRAFANA_SERVICE_PORT}"

logging: *logging

# OpenTelemetry Collector

@@ -658,10 +709,15 @@ services:

- ./src/otelcollector/otelcol-config.yml:/etc/otelcol-config.yml

- ./src/otelcollector/otelcol-config-extras.yml:/etc/otelcol-config-extras.yml

ports:

- - "4317" # OTLP over gRPC receiver

- - "4318" # OTLP over HTTP receiver

+ - "4317" # OTLP over gRPC receiver

+ - "4318:4318" # OTLP over HTTP receiver

+ - "13133:13133" # health check port

+ - "9464" # Prometheus exporter

+ - "8888" # metrics endpoint

depends_on:

- - jaeger

+ - jaeger-agent

+ - jaeger-query

+ - data-prepper

logging: *logging

environment:

- ENVOY_PORT

@@ -690,37 +746,62 @@ services:

- "${PROMETHEUS_SERVICE_PORT}:${PROMETHEUS_SERVICE_PORT}"

logging: *logging

+ # OpenSearch store - engine

opensearch:

- image: opensearchproject/opensearch:latest

+ image: opensearchstaging/opensearch:${OPENSEARCH_VERSION}

container_name: opensearch

environment:

- - cluster.name=demo-cluster

- - node.name=demo-node

+ - cluster.name=opensearch-cluster

+ - node.name=opensearch

+ - discovery.seed_hosts=opensearch

+ - cluster.initial_cluster_manager_nodes=opensearch

- bootstrap.memory_lock=true

- - discovery.type=single-node

- "OPENSEARCH_JAVA_OPTS=-Xms512m -Xmx512m"

- - "DISABLE_INSTALL_DEMO_CONFIG=true"

- - "DISABLE_SECURITY_PLUGIN=true"

ulimits:

memlock:

soft: -1

hard: -1

nofile:

- soft: 65536

+ soft: 65536 # Maximum number of open files for the opensearch user - set to at least 65536

hard: 65536

+ volumes:

+ - opensearch-data:/usr/share/opensearch/data # Creates a volume called opensearch-data and mounts it to the container

ports:

- - "9200:9200"

+ - 9200:9200

+ - 9600:9600

logging: *logging

+ expose:

+ - "9200"

+ healthcheck:

+ test: ["CMD", "curl", "-fsk", "-u", "admin:admin", "https://localhost:9200/_cluster/health?wait_for_status=yellow"]

+ interval: 20s

+ timeout: 10s

+ retries: 10

- dataprepper:

+ # OpenSearch ingestion - Data Prepper

+ data-prepper:

image: opensearchproject/data-prepper:latest

volumes:

- - ./src/opensearch/pipelines.yaml:/usr/share/data-prepper/pipelines/pipelines.yaml

- - ./src/opensearch/data-prepper-config.yaml:/usr/share/data-prepper/config/data-prepper-config.yaml

+ - ./src/dataprepper/pipelines.yaml:/usr/share/data-prepper/pipelines/pipelines.yaml

+ - ./src/dataprepper/data-prepper-config.yaml:/usr/share/data-prepper/config/data-prepper-config.yaml

ports:

- "21892"

logging: *logging

+ # OpenSearch store - dashboard

+ opensearch-dashboards:

+ image: opensearchproject/opensearch-dashboards:${OPENSEARCH_VERSION} # Make sure the version of opensearch-dashboards matches the version of opensearch installed on other nodes

+ container_name: opensearch-dashboards

+ ports:

+ - 5601:5601 # Map host port 5601 to container port 5601

+ expose:

+ - "5601" # Expose port 5601 for web access to OpenSearch Dashboards

+ environment:

+ OPENSEARCH_HOSTS: '["https://opensearch:9200"]' # Define the OpenSearch nodes that OpenSearch Dashboards will query

+ depends_on:

+ - opensearch

+ - prometheus

+

# *****

# Tests

# *****

@@ -855,7 +936,7 @@ services:

otelcol:

condition: service_started

healthcheck:

- test: [ "CMD", "wget", "--spider", "localhost:11633" ]

+ test: ["CMD", "wget", "--spider", "localhost:11633"]

interval: 1s

timeout: 3s

retries: 60
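With the security plugin enabled, OpenSearch serves `_cluster/health` over HTTPS with a self-signed demo certificate and requires credentials (the compose file passes `admin`/`admin` to Jaeger). A minimal sketch of a host-side wait loop, assuming those demo credentials and the `9200:9200` port mapping; `wait_for_opensearch` is a hypothetical helper, and its default of 10 attempts 20 seconds apart mirrors the healthcheck's `retries` and `interval`:

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll OpenSearch until the cluster reports at least
# yellow health. Arguments: base URL, number of attempts, delay in seconds.
wait_for_opensearch() {
  local url="${1:-https://localhost:9200}" attempts="${2:-10}" delay="${3:-20}" i
  for ((i = 1; i <= attempts; i++)); do
    # -f: treat HTTP error statuses (e.g. 401, or a timed-out wait) as failure
    # -s: silent, -k: accept the self-signed demo certificate
    if curl -fsk -u admin:admin --max-time 10 \
        "$url/_cluster/health?wait_for_status=yellow&timeout=5s" > /dev/null; then
      echo "OpenSearch is up at $url"
      return 0
    fi
    echo "attempt $i/$attempts: OpenSearch not ready" >&2
    # Sleep between attempts, but not after the last one.
    if [ "$i" -lt "$attempts" ]; then sleep "$delay"; fi
  done
  return 1
}
```

For example, running `wait_for_opensearch` before opening OpenSearch Dashboards avoids hitting the UI while the cluster is still initializing its security index.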

diff --git a/local-config.md b/local-config.md

new file mode 100644

index 0000000000..f16744b894

--- /dev/null

+++ b/local-config.md

@@ -0,0 +1,33 @@

+# Local Dev Config

+

+Use the following configuration to locally run and test the OTEL demo:

+

+- For additional help, see the [OpenSearch Docker installation guide](https://opensearch.org/docs/latest/install-and-configure/install-opensearch/docker/)

+

+### Raise your host's memory map limit

+Raise the maximum number of memory map areas (`vm.max_map_count`) so that OpenSearch can memory-map its index files under high I/O:

+

+`sudo sysctl -w vm.max_map_count=512000`

+

+### Map domain names to local DNS

+

+Run the [add_hosts_locally.sh](add_hosts_locally.sh) script to map the Docker Compose service names to your local DNS (`/etc/hosts`). The relevant part of the script:

+

+```shell

+IP="127.0.0.1"

+# The hostnames to associate with the IP address

+OPENSEARCH_HOST="opensearch"

+OPENSEARCH_DASHBOARD="opensearch-dashboards"

+OTEL_STORE="frontend"

+OTEL_LOADER="loadgenerator"

+PROMETHEUS="prometheus"

+

+# Add the entry to the /etc/hosts file

+

+echo "$IP $OPENSEARCH_HOST" | sudo tee -a /etc/hosts

+echo "$IP $OPENSEARCH_DASHBOARD" | sudo tee -a /etc/hosts

+echo "$IP $OTEL_STORE" | sudo tee -a /etc/hosts

+echo "$IP $PROMETHEUS" | sudo tee -a /etc/hosts

+echo "$IP $OTEL_LOADER" | sudo tee -a /etc/hosts

+

+```

\ No newline at end of file
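OpenSearch's Docker installation guide asks for `vm.max_map_count` of at least 262144; the command above sets 512000. A hypothetical pre-flight check (`check_max_map_count` is not part of the repository) that reads the current value from `/proc/sys/vm/max_map_count` on Linux:

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check: succeed only if vm.max_map_count is at least
# the required value (262144 by default). The second argument lets you point
# the check at another file, which is mainly useful for testing.
check_max_map_count() {
  local required="${1:-262144}" source_file="${2:-/proc/sys/vm/max_map_count}" current
  # The kernel exposes the current limit as a single integer in this file.
  current=$(cat "$source_file") || return 2
  if [ "$current" -ge "$required" ]; then
    echo "ok: vm.max_map_count=$current"
  else
    echo "too low: vm.max_map_count=$current (need >= $required)" >&2
    return 1
  fi
}
```

Note that `sysctl -w` lasts only until the next reboot; to make the setting persistent, add `vm.max_map_count=512000` to `/etc/sysctl.conf`.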

diff --git a/src/currencyservice/Dockerfile b/src/currencyservice/Dockerfile

index bb5b998221..a5e8f8718c 100644

--- a/src/currencyservice/Dockerfile

+++ b/src/currencyservice/Dockerfile

@@ -17,6 +17,7 @@

FROM alpine

RUN apk update

+RUN apk add --no-cache curl

RUN apk add git cmake make g++ grpc-dev re2-dev protobuf-dev c-ares-dev

ARG OPENTELEMETRY_CPP_VERSION=1.10.0

diff --git a/src/currencyservice/README.md b/src/currencyservice/README.md

index 27a23f397f..be2951d9ea 100644

--- a/src/currencyservice/README.md

+++ b/src/currencyservice/README.md

@@ -9,7 +9,7 @@ To build the currency service, run the following from root directory

of opentelemetry-demo

```sh

-docker-compose build currencyservice

+docker compose build currencyservice

```

## Run the service

@@ -17,7 +17,7 @@ docker-compose build currencyservice

Execute the below command to run the service.

```sh

-docker-compose up currencyservice

+docker compose up currencyservice

```

## Run the client

diff --git a/src/dataprepper/README.md b/src/dataprepper/README.md

new file mode 100644

index 0000000000..2bc277fa5d

--- /dev/null

+++ b/src/dataprepper/README.md

@@ -0,0 +1,55 @@

+

+

+# What is Data Prepper

+

+[Data Prepper](https://github.com/opensearch-project/data-prepper/blob/main/docs/overview.md) is an open source utility service. Data Prepper is a server side data collector with abilities to filter, enrich, transform, normalize and aggregate data for downstream analytics and visualization. The broader vision for Data Prepper is to enable an end-to-end data analysis life cycle from gathering raw logs to facilitating sophisticated and actionable interactive ad-hoc analyses on the data.

+

+# What is Data Prepper Integration

+

+Data Prepper integration is concerned with the following aspects:

+

+- Allow simple and automatic generation of schema-structured data for:

+ - traces (including specific field mappings to the SS4O schema)

+ - services (adding support for a specific service-mapping category)

+ - metrics (using the standard SS4O schema)

+

+- Add dashboard assets for correlating traces, services, and metrics

+

+- Add correlation queries to investigate trace-based metrics

+

+# Data Prepper Trace Fields

+Data Prepper uses the following [trace index mapping](https://github.com/opensearch-project/data-prepper/blob/main/docs/schemas/trace-analytics/otel-v1-apm-span-index-template.md).

+It defines the following fields:

+```text

+

+- traceId - A unique identifier for a trace. All spans from the same trace share the same traceId.

+- spanId - A unique identifier for a span within a trace, assigned when the span is created.

+- traceState - Conveys information about request position in multiple distributed tracing graphs.

+- parentSpanId - The spanId of this span's parent span. If this is a root span, then this field must be empty.

+- name - A description of the span's operation.

+- kind - The type of span. See OpenTelemetry - SpanKind.

+- startTime - The start time of the span.

+- endTime - The end time of the span.

+- durationInNanos - Difference in nanoseconds between startTime and endTime.

+- serviceName - Currently derived from the opentelemetry.proto.resource.v1.Resource associated with the span, i.e. the resource from which the span originates.

+- events - A list of events. See OpenTelemetry - Events.

+- links - A list of linked spans. See OpenTelemetry - Links.

+- droppedAttributesCount - The number of attributes that were discarded.

+- droppedEventsCount - The number of events that were discarded.

+- droppedLinksCount - The number of links that were dropped.

+- span.attributes.* - All span attributes are split into a list of keywords.

+- resource.attributes.* - All resource attributes are split into a list of keywords.

+- status.code - The status of the span. See OpenTelemetry - Status.

+

+```

+Data Prepper also adds derived trace-group fields that are not part of the [OTel trace spec](https://github.com/open-telemetry/opentelemetry-specification/blob/main/specification/trace/api.md):

+```text

+- traceGroup - A derived field, the name of the trace's root span.

+- traceGroupFields.endTime - A derived field, the endTime of the trace's root span.

+- traceGroupFields.statusCode - A derived field, the status.code of the trace's root span.

+- traceGroupFields.durationInNanos - A derived field, the durationInNanos of the trace's root span.

+

+```

+### Metrics from Traces Processors

+

+Processors that create metrics from the logs and traces passing through Data Prepper are introduced in the [Data Prepper 2.1.0 announcement](https://opensearch.org/blog/Announcing-Data-Prepper-2.1.0/).

\ No newline at end of file
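To see the derived trace-group fields on real data, you can query the span index directly. A sketch assuming that Data Prepper's `trace-analytics-raw` index type writes to indices matching `otel-v1-apm-span-*`, that OpenSearch is reachable on `localhost:9200`, and that the demo's `admin`/`admin` credentials apply; `root_span_query` and `search_root_spans` are hypothetical helpers:

```shell
#!/usr/bin/env bash
# Hypothetical helpers for inspecting the derived trace-group fields.

# Print a search body for the N most recent root spans (root spans have an
# empty parentSpanId), returning only the name and trace-group fields.
root_span_query() {
  local size="${1:-5}"
  printf '{"size":%d,"sort":[{"startTime":"desc"}],"query":{"term":{"parentSpanId":""}},"_source":["traceId","name","traceGroup","traceGroupFields.durationInNanos","traceGroupFields.statusCode"]}\n' "$size"
}

# Send the query to OpenSearch; -k accepts the demo's self-signed certificate,
# -f makes HTTP errors (e.g. a missing index) return a non-zero status.
search_root_spans() {
  local base_url="${1:-https://localhost:9200}" size="${2:-5}"
  curl -fsk -u admin:admin --max-time 10 \
    -H 'Content-Type: application/json' \
    "$base_url/otel-v1-apm-span-*/_search" \
    -d "$(root_span_query "$size")"
}
```

Since these hits are root spans, each hit's `traceGroup` should equal its own `name`, which is a quick way to sanity-check the derived fields.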

diff --git a/src/opensearch/data-prepper-config.yaml b/src/dataprepper/data-prepper-config.yaml

similarity index 56%

rename from src/opensearch/data-prepper-config.yaml

rename to src/dataprepper/data-prepper-config.yaml

index 2af22a1b45..e4e63ad948 100644

--- a/src/opensearch/data-prepper-config.yaml

+++ b/src/dataprepper/data-prepper-config.yaml

@@ -1,4 +1,3 @@

-# Copyright The OpenTelemetry Authors

# SPDX-License-Identifier: Apache-2.0

ssl: false

diff --git a/src/dataprepper/dataPrepper.svg b/src/dataprepper/dataPrepper.svg

new file mode 100644

index 0000000000..2e17a10168

--- /dev/null

+++ b/src/dataprepper/dataPrepper.svg

@@ -0,0 +1,8 @@

+

\ No newline at end of file

diff --git a/src/opensearch/pipelines.yaml b/src/dataprepper/pipelines.yaml

similarity index 85%

rename from src/opensearch/pipelines.yaml

rename to src/dataprepper/pipelines.yaml

index 71acd227cb..b9c66a6d41 100644

--- a/src/opensearch/pipelines.yaml

+++ b/src/dataprepper/pipelines.yaml

@@ -7,7 +7,7 @@ demo-pipeline:

ssl: false

sink:

- opensearch:

- hosts: ["http://opensearch:9200"]

+ hosts: ["https://opensearch:9200"]

insecure: true

username: admin

password: admin

diff --git a/src/dataprepper/root-ca.pem b/src/dataprepper/root-ca.pem

new file mode 100644

index 0000000000..4015d866e1

--- /dev/null

+++ b/src/dataprepper/root-ca.pem

@@ -0,0 +1,24 @@

+-----BEGIN CERTIFICATE-----

+MIID/jCCAuagAwIBAgIBATANBgkqhkiG9w0BAQsFADCBjzETMBEGCgmSJomT8ixk

+ARkWA2NvbTEXMBUGCgmSJomT8ixkARkWB2V4YW1wbGUxGTAXBgNVBAoMEEV4YW1w

+bGUgQ29tIEluYy4xITAfBgNVBAsMGEV4YW1wbGUgQ29tIEluYy4gUm9vdCBDQTEh

+MB8GA1UEAwwYRXhhbXBsZSBDb20gSW5jLiBSb290IENBMB4XDTE4MDQyMjAzNDM0

+NloXDTI4MDQxOTAzNDM0NlowgY8xEzARBgoJkiaJk/IsZAEZFgNjb20xFzAVBgoJ

+kiaJk/IsZAEZFgdleGFtcGxlMRkwFwYDVQQKDBBFeGFtcGxlIENvbSBJbmMuMSEw

+HwYDVQQLDBhFeGFtcGxlIENvbSBJbmMuIFJvb3QgQ0ExITAfBgNVBAMMGEV4YW1w

+bGUgQ29tIEluYy4gUm9vdCBDQTCCASIwDQYJKoZIhvcNAQEBBQADggEPADCCAQoC

+ggEBAK/u+GARP5innhpXK0c0q7s1Su1VTEaIgmZr8VWI6S8amf5cU3ktV7WT9SuV

+TsAm2i2A5P+Ctw7iZkfnHWlsC3HhPUcd6mvzGZ4moxnamM7r+a9otRp3owYoGStX

+ylVTQusAjbq9do8CMV4hcBTepCd+0w0v4h6UlXU8xjhj1xeUIz4DKbRgf36q0rv4

+VIX46X72rMJSETKOSxuwLkov1ZOVbfSlPaygXIxqsHVlj1iMkYRbQmaTib6XWHKf

+MibDaqDejOhukkCjzpptGZOPFQ8002UtTTNv1TiaKxkjMQJNwz6jfZ53ws3fh1I0

+RWT6WfM4oeFRFnyFRmc4uYTUgAkCAwEAAaNjMGEwDwYDVR0TAQH/BAUwAwEB/zAf

+BgNVHSMEGDAWgBSSNQzgDx4rRfZNOfN7X6LmEpdAczAdBgNVHQ4EFgQUkjUM4A8e

+K0X2TTnze1+i5hKXQHMwDgYDVR0PAQH/BAQDAgGGMA0GCSqGSIb3DQEBCwUAA4IB

+AQBoQHvwsR34hGO2m8qVR9nQ5Klo5HYPyd6ySKNcT36OZ4AQfaCGsk+SecTi35QF

+RHL3g2qffED4tKR0RBNGQSgiLavmHGCh3YpDupKq2xhhEeS9oBmQzxanFwWFod4T

+nnsG2cCejyR9WXoRzHisw0KJWeuNlwjUdJY0xnn16srm1zL/M/f0PvCyh9HU1mF1

+ivnOSqbDD2Z7JSGyckgKad1Omsg/rr5XYtCeyJeXUPcmpeX6erWJJNTUh6yWC/hY

+G/dFC4xrJhfXwz6Z0ytUygJO32bJG4Np2iGAwvvgI9EfxzEv/KP+FGrJOvQJAq4/

+BU36ZAa80W/8TBnqZTkNnqZV

+-----END CERTIFICATE-----

diff --git a/src/dataprepper/trace_analytics_no_ssl_2x.yml b/src/dataprepper/trace_analytics_no_ssl_2x.yml

new file mode 100644

index 0000000000..889facd5ce

--- /dev/null

+++ b/src/dataprepper/trace_analytics_no_ssl_2x.yml

@@ -0,0 +1,37 @@

+entry-pipeline:

+ delay: "100"

+ source:

+ otel_trace_source:

+ ssl: false

+ sink:

+ - pipeline:

+ name: "raw-pipeline"

+ - pipeline:

+ name: "service-map-pipeline"

+raw-pipeline:

+ source:

+ pipeline:

+ name: "entry-pipeline"

+ processor:

+ - otel_trace_raw:

+ sink:

+ - opensearch:

+ hosts: [ "https://opensearch:9200" ]

+ insecure: true

+ username: "admin"

+ password: "admin"

+ index_type: trace-analytics-raw

+service-map-pipeline:

+ delay: "100"

+ source:

+ pipeline:

+ name: "entry-pipeline"

+ processor:

+ - service_map_stateful:

+ sink:

+ - opensearch:

+ hosts: ["https://opensearch:9200"]

+ insecure: true

+ username: "admin"

+ password: "admin"

+ index_type: trace-analytics-service-map

\ No newline at end of file

diff --git a/src/fluent-bit/README.md b/src/fluent-bit/README.md

new file mode 100644

index 0000000000..ef7371b223

--- /dev/null

+++ b/src/fluent-bit/README.md

@@ -0,0 +1,38 @@

+

+

+## Fluent-bit

+

+Fluent-bit is a lightweight and flexible data collector and forwarder, designed to handle a large volume of log data in real-time.

+It is an open-source project, part of the Cloud Native Computing Foundation (CNCF), and has gained popularity among developers for its simplicity and ease of use.

+

+Fluent-bit is designed to be lightweight: it has a small footprint and can be installed in resource-constrained environments such as embedded systems or containers. It is written in C, which makes it fast and efficient, and its low memory usage lets it run on minimal system resources.

+

+Fluent-bit is a versatile tool that can collect data from various sources, including files, standard input, syslog, and TCP/UDP sockets. It also supports parsing different log formats like JSON, Apache, and Syslog. Fluent-bit provides a flexible configuration system that allows users to tailor their log collection needs, which makes it easy to adapt to different use cases.

+

+One of the main advantages of Fluent-bit is its ability to forward log data to various destinations, including OpenSearch, InfluxDB, and Kafka. Fluent-bit provides multiple output plugins that let users route their log data to different destinations based on their requirements. This makes Fluent-bit well suited to distributed systems where log data needs to be collected in a central repository.

+

+Fluent-bit also provides a powerful filtering mechanism that allows users to manipulate log data in real-time. It supports various filter plugins, including record modifiers, parsers, and field extraction. With these filters, users can parse and enrich log data, extract fields, and modify records before sending them to their destination.

+

+## Setting Up the Fluent-bit Agent

+

+To set up a Fluent-bit agent on an Nginx server, follow these steps:

+

+- Install Fluent-bit on the Nginx server. You can download the latest package from the official Fluent-bit website or use your package manager to install it.

+

+- Once Fluent-bit is installed, create a configuration file named `fluent-bit.conf` in the `/etc/fluent-bit/` directory. This repository's [fluent-bit.conf](fluent-bit.conf) can serve as a starting point.

+

+For a host install, use the `tail` input plugin: set its path to the Nginx access log file, set a tag to identify the logs in Fluent-bit, and optionally set parameters such as a memory buffer limit and skipping long lines. In this demo, the Nginx container instead ships its logs through Docker's `fluentd` logging driver (tag `nginx.access`), so the bundled configuration uses the `forward` input on port 24224.

+

+For the output, use the `opensearch` plugin to send the logs to OpenSearch, specifying the OpenSearch host, port, credentials, and index name (`sso_logs-nginx-prod` in this demo).

+

+ - Modify the OpenSearch host and port in the configuration file to match your OpenSearch installation.

+ - Start the Fluent-bit service. The command depends on the system where Fluent-bit is installed; on systemd-based systems, run:

+

+```shell

+sudo systemctl start fluent-bit

+```

+- Verify that Fluent-bit is running by checking its status:

+```shell

+sudo systemctl status fluent-bit

+```
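Once Fluent-bit is running, you can check that Nginx access logs are reaching OpenSearch by counting the documents in the output index. A sketch assuming the `sso_logs-nginx-prod` index and `admin`/`admin` credentials from this demo's `fluent-bit.conf`, and OpenSearch exposed on `localhost:9200`; `extract_count` and `count_nginx_logs` are hypothetical helpers:

```shell
#!/usr/bin/env bash
# Hypothetical verification helpers for the Fluent-bit -> OpenSearch path.

# Read an OpenSearch _count response on stdin and print just the number.
extract_count() {
  sed -n 's/.*"count":\([0-9][0-9]*\).*/\1/p'
}

# Count the documents Fluent-bit has indexed; -k accepts the self-signed
# demo certificate, -f turns HTTP errors into a non-zero exit status.
count_nginx_logs() {
  local base_url="${1:-https://localhost:9200}" index="${2:-sso_logs-nginx-prod}" body
  body=$(curl -fsk -u admin:admin --max-time 10 "$base_url/$index/_count") || return 1
  printf '%s\n' "$body" | extract_count
}
```

While the load generator sends traffic through Nginx, repeated calls to `count_nginx_logs` should print a growing number; a failure usually means the index has not been created yet or OpenSearch is unreachable.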

diff --git a/src/fluent-bit/fluent-bit.conf b/src/fluent-bit/fluent-bit.conf

new file mode 100644

index 0000000000..9bdba7923b

--- /dev/null

+++ b/src/fluent-bit/fluent-bit.conf

@@ -0,0 +1,36 @@

+[SERVICE]

+ Parsers_File parsers.conf

+ Log_Level info

+ Daemon off

+[INPUT]

+ Name forward

+ Port 24224

+

+[FILTER]

+ Name parser

+ Match nginx.access

+ Key_Name log

+ Parser nginx

+

+[FILTER]

+ Name lua

+ Match nginx.access

+ code function cb_filter(a,b,c)local d={}local e=os.date("!%Y-%m-%dT%H:%M:%S.000Z")d["observedTimestamp"]=e;d["body"]=c.remote.." "..c.host.." "..c.user.." ["..os.date("%d/%b/%Y:%H:%M:%S %z").."] \""..c.method.." "..c.path.." HTTP/1.1\" "..c.code.." "..c.size.." \""..c.referer.."\" \""..c.agent.."\""d["trace_id"]="102981ABCD2901"d["span_id"]="abcdef1010"d["attributes"]={}d["attributes"]["data_stream"]={}d["attributes"]["data_stream"]["dataset"]="nginx.access"d["attributes"]["data_stream"]["namespace"]="production"d["attributes"]["data_stream"]["type"]="logs"d["event"]={}d["event"]["category"]={"web"}d["event"]["name"]="access"d["event"]["domain"]="nginx.access"d["event"]["kind"]="event"d["event"]["result"]="success"d["event"]["type"]={"access"}d["http"]={}d["http"]["request"]={}d["http"]["request"]["method"]=c.method;d["http"]["response"]={}d["http"]["response"]["bytes"]=tonumber(c.size)d["http"]["response"]["status_code"]=c.code;d["http"]["flavor"]="1.1"d["http"]["url"]=c.path;d["communication"]={}d["communication"]["source"]={}d["communication"]["source"]["address"]="127.0.0.1"d["communication"]["source"]["ip"]=c.remote;return 1,b,d end

+ call cb_filter

+

+[OUTPUT]

+ Name opensearch

+ Match nginx.*

+ Host opensearch

+ Port 9200

+ tls On

+ tls.verify Off

+ HTTP_Passwd admin

+ HTTP_User admin

+ Index sso_logs-nginx-prod

+ Generate_ID On

+ Retry_Limit False

+ Suppress_Type_Name On

+[OUTPUT]

+ Name stdout

+ Match nginx.access

\ No newline at end of file

diff --git a/src/fluent-bit/fluentbit.png b/src/fluent-bit/fluentbit.png

new file mode 100644

index 0000000000..770a562a6c

Binary files /dev/null and b/src/fluent-bit/fluentbit.png differ

diff --git a/src/fluent-bit/parsers.conf b/src/fluent-bit/parsers.conf

new file mode 100644

index 0000000000..d9015f210a

--- /dev/null

+++ b/src/fluent-bit/parsers.conf

@@ -0,0 +1,6 @@

+[PARSER]

+ Name nginx

+ Format regex

+ Regex ^(?[^ ]*) (?[^ ]*) (?[^ ]*) \[(?